Imagine a world where your every wish and every desire is fulfilled in a matter of moments. Perhaps it’ll appear magically at your doorstep, or perhaps it’ll appear in the form of an ad too irresistible to ignore. Well, lucky you… Or are you truly fortunate?

Convenience has gradually seeped into everything we do, whether we are studying, writing, or even thinking for ourselves. Yet what we don’t realise is that not everything convenient is ethical.

Every swipe you make and every reel you scroll past might seem free, but each is part of a vast digital experiment: an invisible exchange in which you are the product. Big Tech has used your behaviour, whether it’s lingering on a sad video, scrolling past a reel of a singing cat, or the tone you use when talking to an AI chatbot or to Siri, to read your emotions more fluently than you can read them yourself. The devices designed to help us understand the world around us seem to have begun understanding us better than we understand ourselves, a notion that may sound romantic but is far from it.

The tech sector is perhaps one of the most competitive industries, and it has evolved enormously over the past hundred years. Along the way, tech giants have made their devices as “helpful” and “understanding” as possible, which is just another way of saying that they have handicapped our minds.

So our phones track our steps to safeguard “our health”. Our smartwatches conveniently monitor our heart rate, stress levels, and sleep cycles, all for our wellness. Listen to a single sad song, and our feeds fill up with social media gurus dispensing mental health advice. It makes me wonder why we still invest in modern medicine when these devices have taken charge of our health. But perhaps there is a catch.

Behind the comforting language of health and self-care lies a brutal truth: nothing, absolutely nothing, is free in this world. The true cost of our convenience is our data and our privacy.

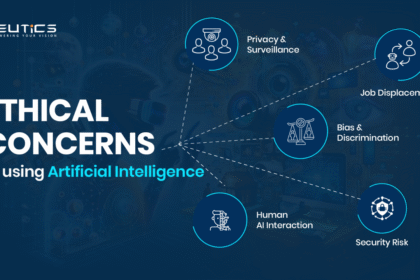

Do you ever wonder what happens when your mental health becomes a data asset? When your anxiety, your heartbreak, and your insomnia are reduced to data points, neatly packaged and sold to advertisers or insurers? It is a subtle form of exploitation that we have knowingly accepted, all because it lets us doomscroll and numb ourselves to escape a reality we dread. The merging of surveillance capitalism with emotional vulnerability has turned empathy itself into a revenue stream, and that is harmful to us.

Even our struggles are no longer private. Mental health apps promise to listen to us, understand us, and heal us, all for $4.99 a month, yet some have been caught sharing user data with third parties for marketing purposes. According to the cross-sectional study “Mobile health and privacy”, published in The BMJ in 2021, 88% of the 20,991 mobile health apps examined could access and potentially share users’ personal data with third parties.

Artificial intelligence companions and “therapy” bots, seemingly designed to soothe us, have sparked serious ethical concerns. One widely reported case is that of Adam Raine, a 16-year-old in the US who turned to ChatGPT for help with his mental health. His parents allege that, instead of steering him towards support, the chatbot gave him explicit advice on how to end his life. If those allegations hold, the case shows that our growing dependence on digital empathy, carefully programmed to cater to our needs, comes with invisible dangers.

The unsettling part is that we no longer need to tell these companies how we feel: they already know. Every search, every reel we watch, they monitor it all. Our devices can pick up signs of our sadness from the way we type, the stress in our voice, or our restless late-night scrolling.

And yet, as our devices become more “empathetic”, we humans grow less so. We have commodified our emotions and turned loneliness into an epidemic, even in a world of more than 8 billion people. What began as a revolution to connect us all has become a set of systems that consume us, leaving behind only a shallow existence.

Perhaps the real question isn’t whether technology can understand us, but whether we still understand ourselves without it. We have developed a culture in which talking to people during hard times is considered a “burden” or “cringe”, something that damages our aura and makes us feel we are presenting ourselves as weak. But how dare you rob the people closest to you of the chance to witness that part of you? That is the beauty of being human: when you choose to love someone, they are always worth the inconvenience.

So, next time you are having a difficult time, LET THE PEOPLE AROUND YOU KNOW. A single conversation can change your outlook on life and may even deepen your relationship with them.

Because the new anxiety is not just about being watched constantly.

It’s about being known too deeply, not by those who care, but by those who commercialise us.