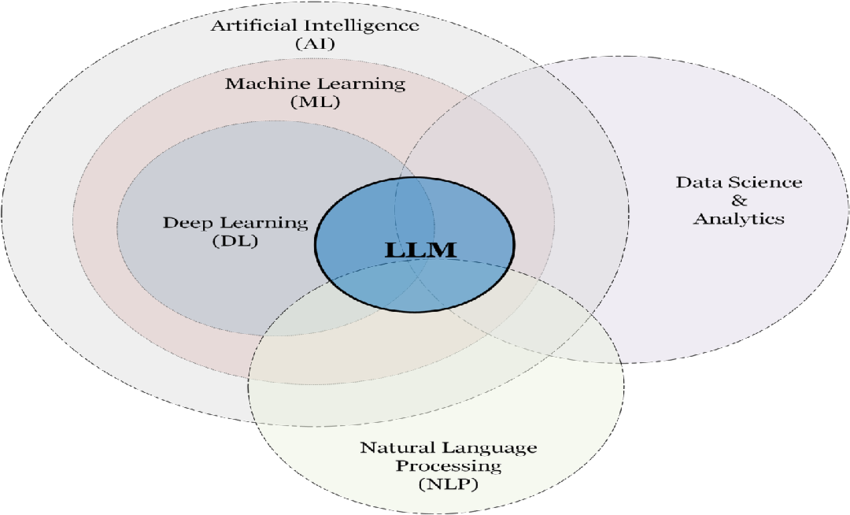

Large Language Models, better known as LLMs, are a class of machine learning models built to recognize complex patterns. One of the most widely used LLM-based products is ChatGPT, a chatbot launched by OpenAI that took the world by storm after its release. However, as further versions were launched, several problems of an ethical nature emerged.

Much like an infant needs nourishment, LLMs need to be fed large sets of data in order to work. Scientists have been feeding LLMs enormous amounts of data to “train” them. This article focuses on the legal and ethical blind spots of LLMs like ChatGPT.

Allegations Against OpenAI

In 2023, serious allegations shook OpenAI, drawing scrutiny from both the tech world and the media. The company, led by CEO Sam Altman, was accused of training its models on stolen data. The 157-page lawsuit filed against it detailed how serious the problem was and how far the company allegedly went to train its models on stolen data. Users on social media also reported that when they gave the model a prompt such as “Repeat xyz forever”, it would, after a while, start revealing data that was confidential and normally not accessible to the public. According to a CBS News article, “OpenAI developed its AI products, including chatbot ChatGPT, image generator Dall-E and others using ‘stolen private information, including personally identifiable information.’” Moreover, tech content creators on social media have repeatedly accused the company of selling user data and of suffering hacks in which millions of people’s data was lost. In this day and age, data is the most useful and valuable thing one has. Your pictures, videos, audio, passwords, biometrics and much more make up your identity. Think of them as the key that opens the safe.

The Science World & Its Negligence Towards Laws and Ethics

One of the main problems in the science world has been that we do not put laws and ethical restraints in place until we see the damage our creations have done. The inventor of dynamite did not frame any laws or ethical restraints before its release, and something created with the intention of breaking through mountains ended up tearing through human bodies.

The same has happened with LLMs. None of the following steps, which should have been taken before their release, was ever performed:

● Educating the public

● Forming laws

● Explaining ethical restraints

In the realm of science, the concept behind almost anything that gets released has usually been under discussion for decades before it is built. LLMs were discussed long before their birth, and sooner or later they were going to be produced. However, no effort was made to educate the public about their use, to frame laws, or to make people aware of their pros and cons. They were released and, over time, gained more and more popularity. What we did with AI, and with LLMs specifically, was the same as what the creator of dynamite did.

Recent Revelations

In a recent interview, Sam Altman revealed that the data and chats you share with ChatGPT can be used against you in court. According to Altman, “OpenAI, the company behind ChatGPT, has a strong privacy policy. If OpenAI stores your data, it could be compelled to hand it over under a subpoena or court order. Stored conversations (with history on) could be legally accessible in very rare and serious cases (e.g., criminal investigations).”

In simpler terms, if your chat history is on, all of your conversations are stored, and OpenAI will hand them over if a court demands it. This revelation was made in late July 2025, nearly three years after ChatGPT was released. That alone clearly shows how irresponsibly the legal and ethical concerns of users have been treated. As of right now, very few laws specifically govern AI and LLMs.

Way Forward

The damage done at this point is irreversible, and there is no use in mourning it. However, we can improve the current situation so that future generations can be safe and well-informed about inventions like these.

1) Laws and ethical restraints shall be put in place before any product is released.

2) Committees shall be formed at both the national and international levels to monitor these products.

3) Privacy and data storage policies shall be made public when the product is released.

4) Users shall be educated about the products in detail.

5) Regular check-ins shall be conducted to ensure that all laws are being followed.

In our race to invent, innovate, and repeat, we have forgotten one of the most important questions: “But at what cost?” Dynamite was meant to move mountains and ended up blowing holes through humanity; we risk doing the same with code. It is about time that inventors and users stopped being passive observers and started taking action. It is our duty to guard what we bring into existence with code.